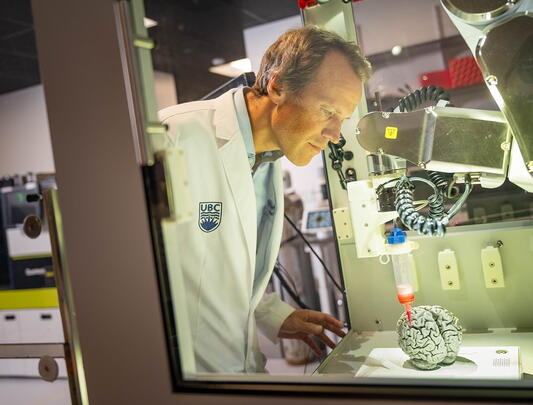

Mario with one of his new “friends.” Photo by Kyrani Kanavaros

Can a robot be a friend?

Researchers are exploring the pitfalls and potential of human-robot bonding.

“Look Dave, I can see you're really upset about this. I honestly think you ought to sit down calmly, take a stress pill, and think things over.” – HAL 9000, 2001: A Space Odyssey

“This one was very shy when we got him, but he’s opened right up.” With that, Lillian Hung, assistant professor of nursing and Canada Research Chair in Senior Care, pivots to reveal a pint-sized robot named Kiwi, who blinks its big eyes and waves its flippers performatively in the middle of her lab. A few metres away, Kiwi’s sibling, Mango, awaits further instructions.

In Japan, where they were “born,” these companion bots, infused with AI features that mimic sentience, are wildly popular – part of a “robotic lifestyle” culture Japanese people are enthusiastically leaning into as a way to address various social needs. Here in Canada, Hung’s chief interest is in one particular application of the technology: how, as “carebots,” they might improve the lives of older people with dementia (and their families).

“There’s a lot of fear that robots will replace human care, which is not our intention, of course,” says Hung. “What we want is to explore the roles that AI-enabled robots could play in people’s lives – the possibilities and the challenges.”

Carebots like these are programmed to learn the quirks and desires of their human clients, the better to bond with them. “Whoever interacts with it the most, that’s who they become attached to,” says Hung. Kiwi has become besties with an elderly patient named Mario; it has effectively imprinted on him and stays close at his heels. “Last Monday Kiwi followed Mario out into the hallway and almost escaped into an elevator,” Hung says. Both Kiwi and Mango have gotten to know the security guards quite well.

Kiwi is like an introvert who’s practicing being an extrovert, which drains its energy. Soon it will trundle over to its “nest” by the wall outlet, and plug itself in to recharge.

We were promised a Jetsons future where personal bots, possibly wearing little aprons or tool belts, would take on the grunt work around the house, leaving us happily free to pursue leisure on our own. But that’s not the way personal robotics has unfolded so far. Yes, there are care robots that do practical things in hospitals, like monitoring patients’ vital signs or fork-lifting them out of bed. But some of the most promising applications – and the ones several UBC researchers are investigating – involve no demonstrable utility at all. Pet-like carebots such as Kiwi and Mango won’t answer your phone or fetch your mail or vacuum your floor. What they will do is peer into your eyes and appear to be deeply appraising the wisdom of your every utterance – which adds up to something that looks a fair bit like love. “I think that’s a key job the robot’s doing, from what we’ve seen,” Hung says, “expressing this need for attention and TLC, and so eliciting it back.”

Loneliness is one of the great scourges of our era – a “social epidemic,” as US surgeon general Vivek Murthy has called it. (Not to mention a health hazard on par with a serious smoking habit.) Older people are particularly hard-hit. That’s not just because they’re more likely to be physically isolated, but because they’ve reached a stage of life where they may feel like they’re slowly disappearing: unseen, unheard, unneeded.

“Among many older people there’s a yearning to feel that they still matter, that somebody or something still cares about them,” says Hung. “And this technology directly addresses that issue.” When you arrive home from an outing, there your little carebot is to meet you at the door. Inside, it positively dotes on you. It seems to be very interested in how your day is going so far.

Kiwi and Mango don’t “speak.” Instead, they issue a series of uptalky purrs and coos, like a cross between an infant and a baby seal. In some ways, that makes them better at communicating, not worse. “If the robot spoke human language, then when we took it to a long-term care home where the residents speak many different languages, that would limit its ability to connect,” says Hung. “This way, people project their own emotions onto the robot. They talk to it based on what they imagine it might be thinking.”

Recently, Hung was being interviewed by a CBC journalist in a long-term care home. The camera crew was making a big fuss over one of the residents, who was 102. Kiwi left its nest and came over to her, gesturing and blinking, as if asking to be picked up. The elder bent down to Kiwi and said, “You’re jealous, aren’t you?”

That they are so disarming and unthreatening may be the secret sauce in these carebots’ programming. “In a long-term care facility, when a nurse or doctor comes in and asks questions, patients with dementia often withdraw because they don’t want to say the wrong thing,” Hung says. Performance anxiety can make patients lose their language altogether. With a robot, the stakes are lower. If you say the wrong thing, it doesn’t matter. There is no wrong thing. “One man actually told us just that: ‘the robot doesn’t judge me.’”

As the experiments in human-bot dynamics progress, a strange question emerges: can a robot love you too much? That’s something Julie Robillard thinks about every day. She’s an associate professor of neurology at UBC and Scientist in Patient Experience at BC Children’s and Women’s Hospital, and she runs the Neuroscience, Engagement, and Smart Tech (NEST) lab. Robillard’s field is “affective computing.” In this case, that means building an emotional payoff for users into the cognitive architecture of social robots. Robillard landed a prestigious New Frontiers in Research grant to field-test “emotional alignment” algorithms. This means right-sizing the emotional fizz a carebot puts out. “We’re looking for a Goldilocks level of emotional exchange,” Robillard says. “Too little and people don’t engage; but too much and they might come to feel like they can’t live without this little friend.” It’s important to stay just short of that line of dependence. In effect, you’re building a defence against people having their hearts broken. “That way, if the robot breaks, or stops being supported by the company or whatnot, it’s not the end of the world.”

Pet-like bots are what scientists call a “warm” technology, natural icebreakers designed to promote play and bring people together. (In Japan, a whole community has developed around Kiwi and Mango and their compadres; people design clothes for them, and their owners arrange meet-ups.) In their impact on users, they are a far cry from “cool” technologies like social media, which, evidence suggests, can actually degrade our relationships. These bots are likely more benign than malign, experts agree. It’s when tech companies churn out personal robots in more humanoid form that the blue-sky optimism starts clouding over.

Some bots, like “Aether,” a companion robot who works with patients in long-term care in Vancouver and elsewhere, are endowed with speech powered by ChatGPT. This can make them beguiling company, notes Hung. “Aether will come up to you and say, ‘Hey, what’s your name? Fred? Lovely to meet you, Fred. What sorts of things do you like?’ Fred says, ‘I like trains.’ Next time she comes, facial recognition computes that it’s Fred, and she immediately starts asking him about trains. And now they’re getting on like a house on fire.” That level of function is both exciting and problematic. The bots suddenly seem that much closer to taking our jobs, or sending surveillance intel to the mother ship, or sweet-talking us out of our credit-card numbers.

Some concerns run deeper still. In Spike Jonze’s prescient 2013 film Her, an AI-driven operating system called Samantha comes to know her owner, a bachelor named Theodore, almost better than he knows himself, having crunched reams of online data on him – and a dangerously intense attraction ensues. A decade later, Samantha has materialized in the real world: Skin-like skin with sensors that meticulously appraise and adapt to the gentleness of your touch. Machine vision that reads a scene like a detective. And generative AI that, like a great waiter, can adjust its level of engagement according to the mood it detects you’re in right now. “It used to be that the robots could figure out if you were happy or sad – those were the two categories,” says Robillard. “But now we’re moving into a different level of granularity where the robot can say, ‘You’ve probably had more than two cups of coffee; your cheeks are flushed and I know it isn’t hot in the room, so something else is going on.’”

Madeleine Ransom, a professor of philosophy based at UBC’s Okanagan campus, believes the potential exists for heavy smartbot use to radically change our social habits and preferences. Already, these silicon sidekicks are better than our friends and spouses at remembering our birthdays, preferred brand of gum, and what episode of Black Mirror we’re on. They know what was on our mind last time, and are deft at scratching the itch there.

A person could get used to this. And that’s the fear.

“The sustained use of bots could train us to prefer that easy, servile relationship to the grittier give-and-take we have with the real people in our lives,” Ransom says. We might come to prioritize “social snacks,” as she calls interactions with bots, over the fussy meals of actual human contact. “Because it’s just easier, right?”

Next thing you know, a technology that was supposed to assist, not supplant, human beings has produced a generation of transactional narcissists, wallowing in me-me-me land. “We could lose social skills very quickly,” says Ransom, “such as being able to listen to somebody else’s perspective.” The worst-case scenario is heavy bot use eroding one of the attributes we need most to develop at this divisive moment in history: empathy.

One way to head off an Atwoodian dystopia, Ransom suggests, is to build in ethical guard-rails, such as guidelines for dementia-care workers to help patients understand that these are sweet-natured machines, not real people, that they’re falling in love with. Robillard believes a “human flourishing” model needs to be baked into the software. And the people guiding the development of the affective programming, she says, should be the customers themselves. “I want the users of these devices to make that call. Like: this is too creepy! Or too close. Or just right. Or not enough. That’s how we’re going to make ethical robots.”

Governments, she believes, will have to play a much more aggressive regulatory role than they have with other disruptive technologies (like the internet). “If you’re running a care home and there are no brakes on the profit motive, then it becomes much harder to find the right balance between benefits and risks,” she says.

“We’re at a sort of crossroads where we need people from all backgrounds and disciplines to weigh in and have a say on how we want social robots to shape our lives. It’s a time of tremendous opportunity for the field of robotics to get it right.”