Changemakers

“Privacy has gone mainstream”

As AI becomes embedded in our everyday lives, Elizabeth Denham has helped place personal data security high in the collective consciousness.

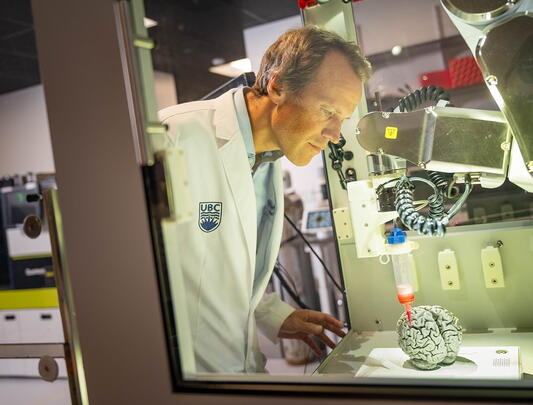

Elizabeth Denham. Photography by Tegan McMartin

Name a major technology trend in the past 20 years – from social media to Google Street View to online gaming – and Elizabeth Denham has been leading the charge to safeguard our data from the companies behind them.

If you’ve ever wondered how much data social media giants are storing and perhaps even sharing with third parties, Denham has been there, tackled that, fined that company. She’s battled mainstays in Big Tech, such as Facebook and Google, and spent countless hours devising ways to mitigate the harms caused by eroding privacy rights.

“I’ve always sat at the intersection of technology and society in my career,” she says. “While Silicon Valley giants have connected us in such a world-changing way, we’re dealing with the kinds of technologies that could be a boon or destructive force for us.”

Her government positions have given Denham a vantage point few ever experience. She was Assistant Privacy Commissioner of Canada from 2007 until 2010, when she was appointed Information and Privacy Commissioner for British Columbia. Six years later she moved overseas to take on the role of UK Information Commissioner.

During her five-year term there, she investigated political consulting firm Cambridge Analytica and its business relationship with Facebook. She interviewed whistleblowers who claimed that the Facebook profiles of millions of people had been collected via an app without consent and used to influence the outcomes of political campaigns, notably those of Donald Trump and Ted Cruz in the US. Her office seized Cambridge Analytica’s servers, eventually prosecuting the company and fining Facebook £500,000 (the highest fine available to her office at the time) for failing to keep the personal information of its users secure.

“Revelations around misuse of data by social media companies, political parties, and data brokers were deeply concerning to many people,” Denham says. “Increasingly accurate micro-targeted messages based on inferences of our political leanings were threatening free and fair elections.”

Denham has long taken an interest in social and political issues. Growing up in Richmond, BC, she learned about the civil and women’s rights movements, and voraciously read books on politics, history, and foreign affairs. She was close to her grandfather, Donald Denham, who served as a deputy minister for the BC Government, and was influenced by his interest in public service. At UBC, she studied history and political science before earning a master’s in archival studies. Her passion for history led to a position as Chief Archivist for the City of Calgary.

As her career evolved from managing historical data into safeguarding private data, Denham became as mindful of the future as she had been attentive to the past. She was acutely aware of the fundamental social change being driven by ever more pervasive applications of artificial intelligence – and of her own role in shaping its trajectory.

“We are at a defining moment, which requires a sense of responsibility and a long-term view,” she said in a speech at the Alan Turing Institute, shortly after the Cambridge Analytica scandal broke in 2018. “Future generations will thank us if the way in which we develop artificial intelligence today looks at the true value it can deliver while respecting data protection and ethical principles.”

As a result of the Information Commissioner’s Office (ICO) investigation and multiple others, practices have improved, says Denham today. “This is true across the tech sector. Privacy and data security are key to users’ trust. The concerns of consumers and the focus of lawmakers have dramatically shifted in the past few years; privacy has gone mainstream.”

Denham has also tackled government transparency, looked into the fundraising practices of charities, and spearheaded a “first-of-its-kind” framework for a code of practice to guide the use of facial recognition technology. But she is particularly satisfied by the guardrails she helped place around children’s online privacy. Children have been exposed to new and serious risks, says Denham. Their data can be used to deliver inappropriate content, including pornography, violent images, and references to self-harm. Their profiles and location data can expose them to online predators. And the addictive nature of gaming and video streaming has helped behavioural advertising to commodify them.

In 2020, she moved decisively to implement the Children’s Code, a set of enforceable standards for online services intended to prevent the exploitation of children’s data and keep them safe. The code’s recommendations include items such as making sure location-sharing options are turned “off” by default.

“The most important, overarching standard in the code is that companies must consider the best interests of the child in their design of services,” says Denham, grandmother of three. “It is unlikely that the economic interests of a company will trump the best interests of a child.”

The code has since been replicated in jurisdictions such as Ireland, the Netherlands, Denmark, and the State of California. Canada, however, has yet to implement one. Denham addressed this lack in a guest op-ed published in The Globe and Mail in October 2022, closely coinciding with the 10th anniversary of the death of Port Coquitlam teenager Amanda Todd due to online bullying and sexual extortion. “Reasonable, even obvious, modifications like these are long overdue,” she wrote. “And they have yet to be implemented universally. We must act urgently and thoughtfully. Lawmakers from around the world should work together to keep children safe in the digital world they have inherited.”

While she has the public interest at heart, Denham has not always worked in a regulatory capacity. When Canada enacted new privacy laws in the late 1990s, she set aside her archivist career and started a consultancy to help enterprise firms navigate stricter standards for the management of customer data. “It was a booming business to be in and all these issues were so interesting to me,” she says. Today, she is back in BC and has returned to consulting as an international advisor on data and technology for the law firm Baker McKenzie.

She continues her fight for children’s privacy rights online with her role as a trustee of 5Rights, a children’s online safety charity, and remains vigilant about the evolution of AI.

“AI and applications using personal data cross a boundary between predicting human behaviour with increasing accuracy and nudging our behaviour in ways that risk our ability to decide for ourselves what is best,” she says. “Will the machine decide our social benefits, our children’s educational streams, the politically targeted messages and the news we receive? The scale and speed of adoption of AI is moving ahead of the laws and regulations that ensure there is a human in the loop. There is an urgency for governments to act now to preserve our future.”