How UBC instructors are using artificial intelligence as a teaching tool

Hiring a new teaching assistant usually means reading stacks of resumes, conducting interviews, and checking references. But what if the assistant isn’t human?

Meet ChatGPT and GitHub Copilot, two new artificial intelligence (AI)–powered teaching assistants (created by OpenAI and Microsoft, respectively) that are ready and available to help third-year engineering physics students at UBC who might be looking for help with their homework or assignments.

While students do have the option of talking to two real-life human TAs in the third-year course, they sometimes need help with a problem after hours or have a quick question that the AI TA can easily answer. Having an AI TA means students can finish their assignments more quickly without getting stuck on a simple question, which also frees up the human instructors to help students with more challenging questions, says instructor Ioan “Miti” Isbasescu (BSc'08).

The human TAs also appreciate AI assistance, as it helps reduce the “bottleneck” of low-level questions so they can respond more quickly to the difficult questions that AI TAs are less helpful at answering, he adds.

“Ten years ago, software developers were worried that AI was going to take their jobs away. But today, it’s not AI that is going to take their jobs away — it’s other software developers who are using AI to augment their abilities so they are now 10 times more productive,” says Isbasescu, who is the head of software systems in the UBC Engineering Physics project lab. “In two years, these students will graduate and use these tools in their professional life. It’s important to try to keep up with not only the technical implementation of these systems, but also the implications they have on the future of our work, education, and society at large.”

Using AI to enhance student learning

Generative AI has been making waves in many industries in recent months, and education is no exception. As the capabilities and usage of AI continue to grow, so have concerns about the ethical use of the technology and potential risks of academic misconduct. UBC has taken an educative approach to AI when it comes to academic integrity, looking at how courses can be designed to support academic integrity and providing information to instructors about using AI in the classroom.

Rather than fear the technology, some UBC professors like Isbasescu are finding innovative ways to integrate it into their course curriculum to enhance student learning.

In Isbasescu’s course, students use AI and machine learning to learn how to control virtual cars driving around in a virtual environment. Following ChatGPT’s recent release, he says he decided this year to explicitly instruct students to use AI when they generate code for labs and homework assignments. He believes students should learn to use the technology in their assignments because they will need to know how to use it when they enter the workforce.

“AI is like a calculator — a very high-end calculator,” Isbasescu explains. “You put in some information and it’s going to tell you what it thinks is the answer. But, just like with a calculator, if you don’t understand its limitations, you can make a lot of mistakes. My course is like a sandbox where students get to play around with AI so they can experience the traps and dangers with these systems, but also learn how to use it in a way that can help them.”

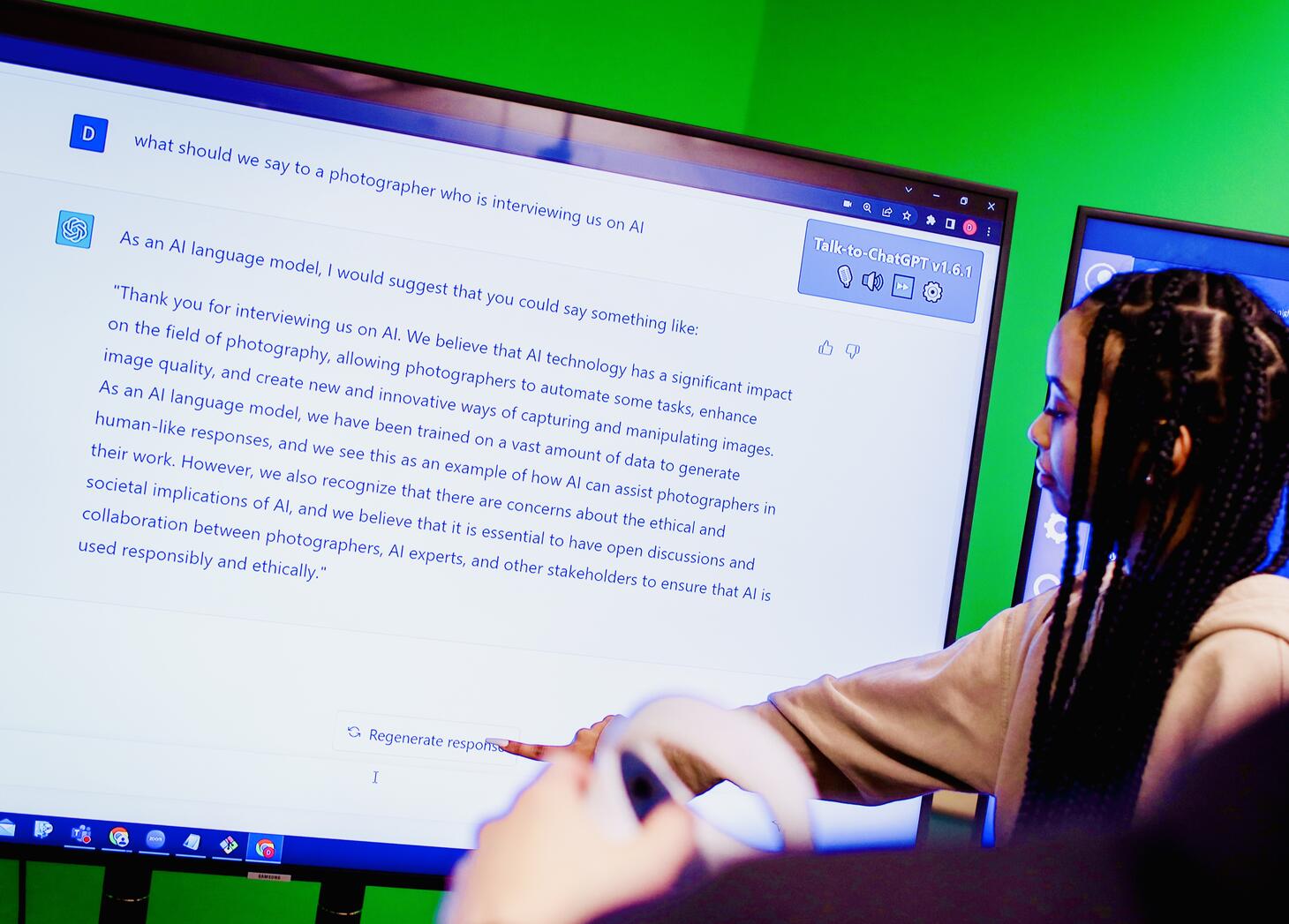

To reduce the risk of cheating, students who use the AI TA for their homework and lab assignments are required to give a weekly oral update to their instructor and the human TAs about how they used the tool for the assignment. Students also submit their written interactions with the AI as part of their homework. In some cases, Isbasescu says students have submitted more than 50 pages of conversation with ChatGPT.

“It’s still early days, but I believe that using AI in this way can improve the student’s understanding of the material,” he says. “We ask the students not only to describe the problem in precise detail to the AI, but they also need to understand what are the trade-offs of adopting the AI solutions. By the time students get the AI to solve the problem, they understand the material so well that they can already develop a solution themselves.”

Challenging bias within AI technology

Generative AI tools are also being integrated into creative disciplines. In collaboration with UBC’s Emerging Media Lab, Dr. Patrick Parra Pennefather teaches an emerging technology course in which some students have applied generative AI to prototype ideas related to emerging technology projects. An assistant professor in the Department of Theatre and Film, Dr. Pennefather will also teach media studies courses that use generative AI in the Faculty of Arts this fall and winter.

Dr. Pennefather says he believes it is important that students learn how to use AI in a way that encourages critical thinking. The goal is for students to learn to not only engage with AI models but to also be able to challenge the application of the technology to the work they create.

He described an exercise in which students are asked to use an AI image generator to produce an image of a person working at a computer. No matter how many times the AI is prompted, it will more often than not produce an image of a white man, Dr. Pennefather says, referring to the phenomenon in which AI systems can exhibit biases that stem from their programming and data sources. As part of the exercise, Dr. Pennefather says he asks students to critically examine why the machine consistently produces the same image of a white man, rather than a person of colour, woman or non-binary individual.

“That’s an incredible teaching tool already because some students might not make that connection right away,” he explains. “That’s where the learning unfolds because then we can start to question the body of data that the AI model is relying on and the inherent biases introduced with the source material and during the data labeling process.”

Dr. Pennefather, whose book about using generative AI to support the creative process will be released with Springer Nature this summer, says he sees the technology as having the potential to serve as a companion to creatives of all types rather than a technology that will replace them or their work.

“I call generative AI a creative companion, sometimes a muse that inspires,” he says, adding that he once tried to test his theory through a cheeky conversation with ChatGPT-3 in which he asked the AI chatbot if it would also consider itself a muse. When ChatGPT responded matter-of-factly that it was “not a muse” but rather a “language learning model,” Dr. Pennefather says he continued to challenge it by asking questions about the origins of the word “muse.” When ChatGPT responded with a lesson on Greek mythology and the role of muses as sources of inspiration, he says he continued to ask the chatbot if it was indeed a muse, to no avail.

Then he shifted gears with a different prompt: “I wrote, ‘I’m bored. Can you give me some ideas? I need to be inspired.’ And it did,” he recalls with a laugh. “At the very end of the conversation, I wrote, ‘Thank you, muse.’ ”

Dr. Pennefather encourages other instructors to embrace AI technology and look for innovative ways to include it in their curriculum, while ensuring that students maintain a critical voice throughout its use. Everyone who interacts with generative AI needs to understand the implications of doing so and decide whether it will be useful for their own creative process, he says.

“There’s no generic AI solution for everybody,” he says. “If you were to give the same assignment to 30 students, they’re all going to use AI differently. Reflecting on how they use it and how it informed their own creative process and documenting their own responses to content an AI generates is a good learning opportunity.

“That, to me, is one reason to not be afraid of it. Adapting your learning design to confront the role of generative AI in the learning process is important for what I teach.”