An artificially intelligent floor-cleaning robot at work on UBC’s Vancouver campus

Robots need not apply

Artificial intelligence is reshaping our world of work, but it’s emotional intelligence that will be a key component for thriving in it.

Fifteen years ago, Facebook was in its infancy, Twitter was being launched, and nobody had an iPhone. Jobs now considered common – social media manager, Uber/Lyft driver, blockchain analyst, cloud architect, data scientist (the list goes on) – did not exist. Neither did Instagram or TikTok.

Technology and the world around us continue to evolve exponentially, and within a decade we will probably be using technologies that have yet to be invented. For that reason, I do not think we can predict the job market much beyond the next five to 10 years. But what we can say with certainty is that rapid change is our new normal.

Full disclosure: I do not consider myself a subject matter expert on “the future of work,” merely a synthesizer (some would say hoarder) and translator of information. There are thousands of reports, articles, videos, podcasts, and books on this topic, and a Google search produces well over a billion results.

Perhaps this is an indication of the anxiety around advancing technologies disrupting the world of work and the stability of occupations. But while there’s little doubt that digital literacy will be – already is – essential, there is also every indication that it’s the ability to leverage their uniquely human qualities that will give workers the edge in a high-tech future.

The Fourth Industrial Revolution

We cannot discuss the future of work without touching on the fourth industrial revolution. Klaus Schwab, founder and executive chairman of the Geneva-based World Economic Forum (WEF), published his book The Fourth Industrial Revolution in 2016 and coined the term at the Davos meeting that year.

The first industrial revolution used water and steam to mechanize production, the second used electric energy to create mass production, and the third used electronics and information technology to automate it, says Schwab. And the world fundamentally changed each time, although it required decades to adapt.

In contrast, the fourth industrial revolution uses more advanced technologies, such as artificial intelligence (AI), and a dramatic increase in computing power and data to bring about rapid change that is both complex and unpredictable. “It is characterized by a range of new technologies that are fusing the physical, digital, and biological worlds, impacting all disciplines, economies, and industries, and even challenging ideas about what it means to be human,” say Schwab and the WEF. “In its scale, scope, and complexity, the transformation will be unlike anything humankind has experienced before.”

AI: friend or foe?

AI is already influencing our everyday life, reshaping our digital and financial worlds in ways we barely register anymore. Ever find yourself going down the rabbit hole of endless YouTube videos? Why does Amazon seem to know what you want to buy before you do? Amy Webb in her book The Big Nine summarizes the current world of AI well:

“Artificial Intelligence is already here, but it did not show up as we all expected. It is the quiet backbone of our financial systems, the power grid and the retail supply chain. It is the invisible infrastructure that directs us through traffic, finds the right meaning in our mistyped words, and determines what we should buy, watch, listen to and read. It is technology upon which our future is being built because it intersects with every aspect of our lives: health and medicine, housing, agriculture, transportation, sports, and even love, sex and death.”

According to a 2021 report by Fortune Business Insights, the global AI market is expected to reach $360.36 billion by 2028 (in 2020 it was valued at $47.47 billion). But AI is proving to be a double-edged sword – with very sharp edges – and many experts are calling for tighter regulations and global cooperation in AI deployment and development, as it will have a long-lasting effect on society.

Some major risks associated with AI that everyone should be aware of include social manipulation (I think we all still vividly remember the Cambridge Analytica scandal); autonomous weapons; invasion of privacy – a prime example being China's social credit system, which is expected to give every one of its 1.4 billion citizens a personal score based on how they behave; and data bias (for example, certain populations being underrepresented in the data used to train AI models).

For many people, AI is scary. Will the world of the future be ruled by robots? And as far as the future of work goes, will they automate us out of our jobs? Although automation has dominated the discussion of AI and the future of work for the past decade, another scenario I find far more dire is a class-based divide between the masses who work for algorithms, a privileged professional class who have the skills and capabilities to design and train algorithmic systems, and a small, ultra-wealthy aristocracy who own the algorithmic platforms that run the world (as described in Mike Walsh’s book The Algorithmic Leader: How to Be Smart When Machines Are Smarter Than You). The key solution to this issue is education designed for the 21st century.

AI also affects work in ways other than automation. For example, it is already involved in selecting candidates for jobs. In 2019, The Washington Post published an article (“A face-scanning algorithm increasingly decides whether you deserve the job”) on a recruitment system that “uses candidates’ computer or cellphone cameras to analyze their facial movements, word choice and speaking voice before ranking them against other applicants based on an automatically generated ‘employability’ score.” It sparked considerable debate in the industry, and the article noted that some AI researchers regard the system as “digital snake oil – an unfounded blend of superficial measurements and arbitrary number-crunching that is not rooted in scientific fact. Analyzing a human being like this, they argue, could end up penalizing non-native speakers, visibly nervous interviewees or anyone else who doesn’t fit the model for look and speech.”

Only time will tell whether technologies like this become the norm in hiring and recruitment. We have certainly seen new trends; for example, many large organizations are using applicant tracking systems (software that sorts and highlights résumés before the hiring manager or any other human even has a look) and various online assessments when making their hiring decisions.

The main concern for a lot of people, however, remains the question of the security of their chosen profession: will it (and they) become obsolete?

The Luddite fallacy

Reports of the death of human jobs have often been greatly exaggerated. The belief that new technology inevitably destroys more jobs than it creates is called the Luddite fallacy, in reference to a group of 19th-century textile workers who smashed the new weaving machinery that made their skills redundant.

In reality, technology has created more jobs than it has wiped out. In its Future of Jobs Report 2020, the World Economic Forum estimates that 85 million jobs will be displaced while 97 million new jobs will be created across 26 countries by 2025. Newer research adds the caveat that, while there might be more jobs in the long term, in the short term there will be losses. And according to McKinsey research, as many as 1 in 16 workers may have to change occupation by 2030, with job growth more heavily concentrated in high-skill jobs.

Workers should be prepared to frequently shift their career trajectory and keep upskilling. I still think that nobody said it better than the futurist Alvin Toffler: “The illiterate of the 21st century will not be those who cannot read or write, but those who cannot learn, unlearn, and re-learn.”

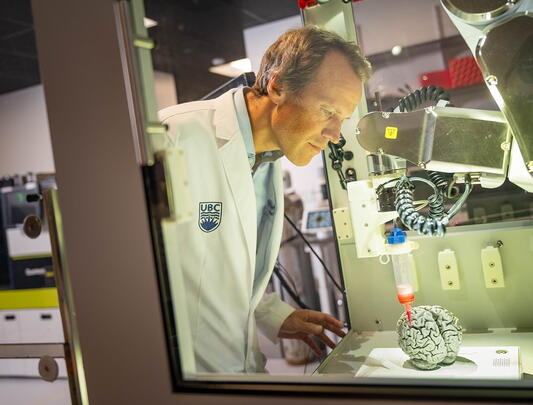

Since not all existing occupations are created equal, which are most at risk of becoming obsolete in the fourth industrial revolution? In his book Rise of the Robots: Technology and the Threat of a Jobless Future, futurist Martin Ford explains that the jobs most at risk are those that are routine, repetitive, and predictable. AI has already produced self-driving cars, robots that clean buildings (UBC has one), and even a robot that can pick berries.

Some commentators suggest that we are single-digit years away from AI that is much more intelligent than humans. A well-known survey of machine learning researchers (the results of which were published in the 2018 paper When Will AI Exceed Human Performance? Evidence from AI Experts) predicted that AI will outperform humans this decade in tasks such as translating languages (by 2024), writing high school essays (2026), and driving trucks (2027). Other tasks will take longer: working in retail (2031), writing a bestselling book (2049), or working as a surgeon (2053). In fact, the experts predicted a 50 per cent chance of AI outperforming humans in all tasks in 45 years and of automating all human jobs in 120 years.

For workers trying to predict the more immediate future, it might be more helpful to think about job categories than specific job titles. Ford’s book (and a good deal of additional research) identifies three main job categories that are likely to survive. The first covers jobs that require genuine creativity. These are not only roles we associate with the arts, like acting or game development, but also roles that require creative thinking – for example, developing a new strategy for your company or launching a new product. The second covers jobs that are highly unpredictable. And the third covers jobs that require building complex relationships with people – leaders and managers, doctors, social workers, teachers, career and leadership coaches. Many reports suggest that the 21st-century worker is a relationship worker. Robots need not apply.

The human touch

This trend is echoed by the growing value hiring professionals place on soft skills. They seek employees who exercise empathy, are highly emotionally intelligent, and can communicate and collaborate effectively. A global survey of 5,000 human resources professionals and hiring managers conducted by LinkedIn, combined with behavioural data analysis, found that 80 per cent considered soft skills to be growing in importance for business success, and 89 per cent saw a lack of soft skills as a hallmark of bad hires in their organizations.

The human-centric approach is being elevated, and, if anything, COVID has accelerated this workplace trend. During the pandemic, many people have experienced challenges with mental health, and more and more organizations are putting the well-being of their staff front and centre. Mental health support will be the new normal, and organizations that do not provide support in this area will not be as sought after by job seekers. In fact, employees are more vocal than ever before about wanting their employers to pay attention to more than just profit. They want to see social engagement; equity, diversity, and inclusion; and investment in the green economy.

Employers need more from their people than ever before if they’re to stay relevant and competitive. Similarly, employees expect more – even demand it – from the organizations they work for. Artificial intelligence is a potent force that is rapidly reshaping our world of work, but it’s emotional intelligence – together with critical thinking, problem solving, and ethical governance of the roll-out of AI – that will enable both businesses and individuals to successfully navigate the change.